Sounds attract camera

By Chhavi Sachdev, Technology Research News

When Stephen Stills penned the lyrics, "Stop! Hey, what's that sound? Everybody look what's going down," he was describing a natural phenomenon that we seldom think about consciously: sounds make us look. When people clap, shout, or whistle to get our attention, our heads instinctively swivel towards them. Imagine the potential of a robot that reacts the same way.

University of Illinois researchers are taking steps towards that goal with a self-aiming camera that, like the biological brain, fuses visual and auditory information.

In time, machines equipped with vision systems like this one could tell a flock of birds from a fleet of aircraft, or zoom in on a student waving her arm to ask a question in a crowded lecture hall.

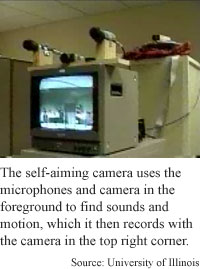

The self-aiming camera consists of a video camera, two microphones, a desktop computer that simulates a neural network, and a second camera that records whatever the system selects. The microphones are mounted about a foot apart to mimic the dynamics of an animal's ears.
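The article does not say how the system turns the two microphone signals into a direction. A common textbook approach, assumed here purely for illustration, uses the interaural time difference: a sound off to one side reaches the nearer microphone slightly earlier, and with microphones d metres apart the bearing is roughly asin(c·Δt/d), where c is the speed of sound. This sketch estimates Δt by brute-force cross-correlation. The function names, the 343 m/s speed of sound, and the 44.1 kHz sample rate are assumptions, not details of the Illinois system.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in room-temperature air (assumed)
MIC_SPACING = 0.3048    # "about a foot apart", converted to metres


def estimate_delay(left, right, sample_rate, max_lag):
    """Estimate how much later a sound reaches the right microphone than the left.

    Brute-force cross-correlation over integer lags in [-max_lag, max_lag].
    A positive result (in seconds) means the sound hit the left microphone first.
    """
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Correlate left[i] with right[i + lag], skipping samples outside the buffer.
        score = sum(left[i] * right[i + lag]
                    for i in range(n) if 0 <= i + lag < len(right))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate


def bearing_from_delay(delay_s, spacing=MIC_SPACING, c=SPEED_OF_SOUND):
    """Far-field bearing in degrees: 0 is straight ahead, positive is toward the left."""
    ratio = c * delay_s / spacing
    ratio = max(-1.0, min(1.0, ratio))  # clamp values pushed outside asin's domain by noise
    return math.degrees(math.asin(ratio))


# A single click that reaches the right microphone 10 samples after the left one.
rate = 44_100
left = [0.0] * 200
right = [0.0] * 200
left[50] = 1.0
right[60] = 1.0
delay = estimate_delay(left, right, rate, max_lag=40)
print(f"delay {delay * 1e3:.3f} ms, bearing {bearing_from_delay(delay):.1f} degrees")
```

With microphones a foot apart, no real sound can arrive more than about 0.9 ms earlier at one microphone than at the other, roughly 39 samples at 44.1 kHz, so scanning ±40 lags covers every physically possible delay.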

The heart of the researchers' system is a software program inspired by the superior colliculus, a small region of the vertebrate brain that is key in deciding which direction to turn the head in response to visual and auditory cues. The colliculus also controls eye saccades, the rapid jumps the eyes make to scan a field of vision.

In deciding where to turn the camera, the system uses the same formula that the brain of a lower-level vertebrate such as a barn owl uses to select a head-turning response, said Sylvian Ray, a professor of computer science at the University of Illinois at Urbana-Champaign.
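The technical paper listed with this story, "Using Bayes' Rule to Model Multisensory Enhancement in the Superior Colliculus," frames the colliculus computation as Bayes' rule: given what the eyes and ears report, how probable is it that a target is present? The following is a minimal sketch under stated assumptions: each sense delivers a Poisson-distributed spike count whose mean rises when a target is present, and the two senses are conditionally independent given the target. The means, the prior, and the function names are made-up illustrations, not values or code from the paper.

```python
import math


def poisson_pmf(k, mean):
    """Probability of seeing k spikes from a Poisson source with the given mean rate."""
    return math.exp(-mean) * mean ** k / math.factorial(k)


def target_posterior(v=None, a=None, prior=0.1,
                     v_means=(1.0, 5.0), a_means=(1.0, 4.0)):
    """P(target | visual count v, auditory count a) by Bayes' rule.

    Each *_means pair is (mean count with no target, mean count with a target).
    Pass None for a sense that delivered no input. All numbers are illustrative
    assumptions; the two senses are assumed conditionally independent.
    """
    joint_target, joint_none = prior, 1.0 - prior
    for count, (mean_none, mean_target) in ((v, v_means), (a, a_means)):
        if count is not None:
            joint_target *= poisson_pmf(count, mean_target)
            joint_none *= poisson_pmf(count, mean_none)
    return joint_target / (joint_target + joint_none)


visual_only = target_posterior(v=5)
auditory_only = target_posterior(a=4)
both = target_posterior(v=5, a=4)
print(f"visual {visual_only:.3f}, auditory {auditory_only:.3f}, both {both:.3f}")
```

With these numbers the combined estimate is higher than either single-sense estimate, which is the kind of multisensory enhancement the paper's title refers to.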

The computer picks out potentially interesting input and calculates the coordinates where sound and visual motion coincide, Ray said. "The output [is] delivered to a turntable [under] a second camera. The turntable rotates to point the second camera at the direction calculated by the neural network."

In this way, the second, self-aiming camera captures the most interesting moving object or source of noise on video for further analysis, saving a human operator the chore of sifting through all the data.

The camera re-aims once a second toward the location most likely to contain whatever is making the most interesting noise. Because it always picks its best estimate of the target's location given all available input, the system works even if several motions or noises happen at once, according to the researchers.
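One way to read "always picks its best estimate" is as an argmax over direction: divide the field of view into bins, combine the visual and auditory evidence for each bin, and aim at the winner. This sketch assumes each sense reports a log-likelihood ratio per bin, so that Bayes' rule reduces to adding them to the prior log-odds. The bin layout, the 5% prior, and the function name are hypothetical; the article does not describe how the network's output is encoded.

```python
import math


def choose_heading(visual_llr, auditory_llr, prior=0.05):
    """Pick the direction bin most likely to contain a target.

    visual_llr, auditory_llr: one log-likelihood ratio per direction bin,
    log P(evidence | target) - log P(evidence | no target). Adding them assumes
    the senses are conditionally independent. Bins evenly span -90..+90 degrees.
    Returns (bearing in degrees of the winning bin's centre, posterior probability).
    """
    if len(visual_llr) != len(auditory_llr):
        raise ValueError("both senses must report the same direction bins")
    prior_log_odds = math.log(prior / (1.0 - prior))
    scores = [prior_log_odds + v + a for v, a in zip(visual_llr, auditory_llr)]
    best = max(range(len(scores)), key=scores.__getitem__)
    bin_width = 180.0 / len(scores)
    bearing = -90.0 + (best + 0.5) * bin_width
    posterior = 1.0 / (1.0 + math.exp(-scores[best]))  # log-odds back to probability
    return bearing, posterior


# Two things move and two things make noise in the same second; only bin 3
# has both, so it wins even though bin 15 holds the single strongest sound.
visual = [0.0] * 18
auditory = [0.0] * 18
visual[3], visual[12] = 2.0, 2.5
auditory[3], auditory[15] = 2.0, 3.0
heading, confidence = choose_heading(visual, auditory)
print(heading, round(confidence, 2))
```

Calling a function like this once per second and sending the bearing to the turntable would mimic the re-aiming cycle the researchers describe. A bin where sight and sound coincide can outscore a bin with only one strong cue, which is one reason simultaneous distractions need not derail the choice.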

The self-aiming camera could be used to cut down on the meaningless data captured by surveillance systems that use several cameras to take pictures of their surroundings, according to Ray. It could also serve as an intelligent surveillance device in hostile environments or for ordinary security, he said.

To have the system differentiate among different types of inputs, the researchers plan to add specializations that will give certain inputs more weight. In nature, different vertebrates respond to particular targets; a cat likes different sounds and motions than an owl, for instance, said Ray. "One specialization of the self-aiming camera would be to train it to like to look for human activity," he said.
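The article does not say how the specializations will be implemented. One hypothetical sketch: score each candidate target by a weighted sum of its features, and let each "specialization" be a different set of weights. The feature names, values, and weights are invented for illustration; the two candidates echo the article's flock-of-birds and lecture-hall examples.

```python
def pick_target(candidates, weights):
    """Return the name of the candidate whose weighted evidence is largest.

    candidates maps a candidate name to {feature name: evidence in [0, 1]};
    weights maps a feature name to how much this "specialization" cares about it.
    Features missing from weights count for nothing.
    """
    def score(features):
        return sum(weights.get(name, 0.0) * value for name, value in features.items())

    return max(candidates, key=lambda name: score(candidates[name]))


# Hypothetical features and numbers, chosen only to show the effect of weighting.
scene = {
    "flock of birds": {"motion": 0.9, "sound": 0.6, "speech_band": 0.1},
    "student waving": {"motion": 0.6, "sound": 0.4, "speech_band": 0.8},
}
generalist = {"motion": 1.0, "sound": 1.0}
human_tuned = {"motion": 0.5, "sound": 0.5, "speech_band": 2.0}

print(pick_target(scene, generalist))   # flock of birds
print(pick_target(scene, human_tuned))  # student waving
```

Raising the weight on the hypothetical speech_band feature is enough to shift attention from the birds to the student, a simple stand-in for training the camera "to like to look for human activity."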

“This is a nice example of exploiting ideas from biology to better engineer systems since this pairing of stimuli increases the reliability and robustness of the self-aiming camera,” said John G. Harris, an associate professor of electrical and computer engineering at the University of Florida. A better understanding of the underlying neural mechanism is still needed, he said.

Eventually the system will have to deal with multiple objects as well as noise and room reflections, Harris said. “Such a system needs an attention mechanism in order to attend to objects of interest while ignoring others. This is a higher level behavior that requires different sets of neurons and is beyond the scope of the current demonstrated system,” he said.

The system could be in practical use in two to three years, according to the researchers. Ray's research colleagues were Thomas Anastasio, Paul Patton, Samarth Swarup, and Alejandro Sarmiento at the University of Illinois. The research was funded by the Office of Naval Research.

Timeline: 2 to 3 years

Funding: government

TRN Categories: Neural Networks; Computer Vision and Image Processing

Story Type: News

Related Elements: Technical paper, "Using Bayes' Rule to Model Multisensory Enhancement in the Superior Colliculus," Neural Computation 12: 1141-1164.

July 25, 2001

© Copyright Technology Research News, LLC 2000-2006. All rights reserved.